When most people think of software, they think of things like MS Word and Mozilla Firefox. These are pieces of software, yes, but they are not what "software" is, any more than Shakespeare's "Romeo and Juliet" is "English". The analogy fits the situation in software almost exactly.

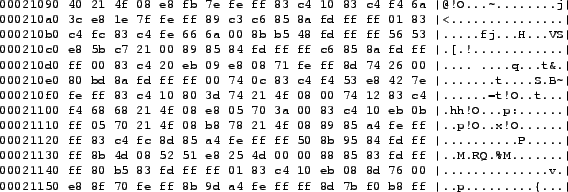

At the most basic level, software is a sequence of numbers, just as written English is a series of glyphs and spaces. These numbers look something like this:

- 2558595091

- 1073777428

- 1382742636

These are "32-bit" numbers; numbers of other sizes are possible. (For more details on this, consult The Art of Assembly Language, particularly the part about the binary number system, which should be relatively easy to understand.)
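As a rough illustration of what "32-bit" means here, the sketch below prints each of the three example numbers as 32 binary digits. The helper name `as_32_bits` is made up for this example; the only assumption is that the numbers are treated as unsigned values.

```python
# The three example numbers from the list above.
numbers = [2558595091, 1073777428, 1382742636]


def as_32_bits(n):
    """Return n as a string of exactly 32 binary digits (hypothetical helper)."""
    # An unsigned 32-bit number must lie between 0 and 2**32 - 1.
    if not 0 <= n < 2**32:
        raise ValueError(f"{n} does not fit in 32 bits")
    # "032b" means: binary, padded with leading zeros to a width of 32.
    return format(n, "032b")


for n in numbers:
    print(as_32_bits(n), "=", n)
```

Each line of output is the same number written two ways: once as the computer stores it, in ones and zeros, and once in the decimal form shown in the list.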

These numbers have no intrinsic meaning by themselves. What we can do is choose to encode meaning into them. Very simply, certain parts of the number can mean that we should do certain things. We might decide that if the number starts with 255, it means "subtract", and that in a subtraction the next three digits indicate the number to subtract from, the following three digits indicate the number to subtract, and the last digit indicates which bin to put the result in. So 255,858,509,1 (odd commas are deliberate) might mean "subtract 509 from 858 and stick the result in memory bin #1", which would place 349 in bin #1. (Computer people will please forgive me for using decimal; even Turing did it in "Can Machines Think?".) Other numbers might instruct the computer to move numbers from bin to bin, add numbers, put a pixel on the screen, jump to another part of the sequence of numbers, and all kinds of other things. But since we have a fairly limited set of numbers to work with, all of these things tend to be very small actions, like "add two numbers and store the result", rather than large actions, like "render a rocket on the screen", which even in the simplest form could require thousands of such smaller instructions.


The power of computers lies in their ability to do lots of these very small things very quickly: millions, billions, or even trillions per second. Software consists of these instructions, millions of them strung together, working together to create a coherent whole. This is one of the reasons software is so buggy; just one wrong bit in one of these millions of numbers can cause the whole program to crash, and with millions of numbers, the odds that one of them will be wrong are pretty good. There are many, many ways of making the numbers mean something, and pretty much every model of CPU uses a different one.

However, it is not enough that the computer understands these numbers and what they mean. If only the computer understood these numbers, nobody could ever write programs. Humans must also understand what these numbers mean.

In fact, the most important thing about these sets of numbers is that humans understand them and agree on what they mean. There are ways of giving numbers meaning that no real-world computer understands. Some of these are used for teaching students; others are used by scholars to research which ways work better for certain purposes, or to communicate with one another in simple, clear ways that are not bound up in the particular details of a specific computer. If no human understands the way that numbers are being given meaning, then it is of no use to humans.